Ryan Laney & Teus Media

Ryan Laney has a long history of using technology to support storytelling for films and special interest projects. He recently completed work on the documentary feature Welcome to Chechnya where he worked with the filmmakers to develop and employ a novel technique to protect identities. He founded Teus Media to continue these efforts, building tools for journalists and documentary filmmakers. As a result of his work and achievements, this week Laney joined the Academy as one of its newest members.

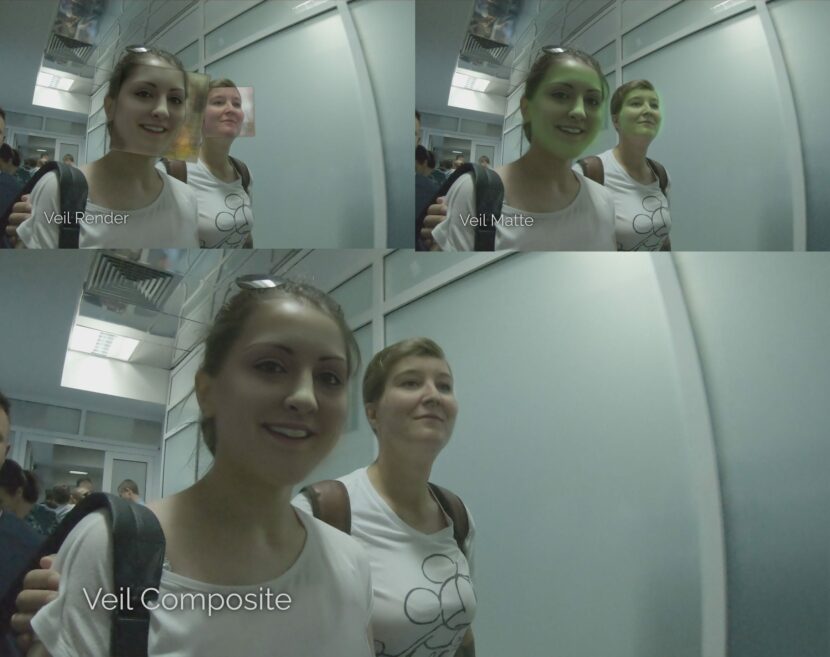

Welcome to Chechnya became the first documentary to ever be shortlisted for the Visual Effects Oscar. The film was directed by David France (2012’s Oscar nominee, How to Survive a Plague) and it chronicles violence against the LGBT population in the Russian republic of Chechnya. Because being exposed as gay is punishable, 23 individuals or witnesses seen in the film had their faces masked in post-production with the faces of volunteers or actors, in 480 shots. In total over 100 screen minutes were produced, with often times multiple people being treated in the same shot.

Getting Involved

Laney’s career includes working at ILM, Digital Domain, and Sony, he has worked on teams developing tools, workflows, and effects for summer blockbusters including Harry Potter, Green Lantern, and Spider-Man. More recently he has been designing software to help remove technical limitations for filmmakers and storytellers.

Laney and his team first got involved to help with about an hour of rotoscoping the filmmakers believed they needed to do to apply some sort of treatment onto the faces of the witnesses. Laney’s team experimented with Style Transfer to do this directly via neural rendering. This approach became the nucleus of the digital veil or ‘censor veil’ technique that the team would end up using in the film.

https://player.vimeo.com/video/559797645?dnt=1&app_id=122963

ML/DL

Transferring the style from one image onto another can be considered a problem of separating separate image content from style. The approach was first introduced in a paper Image Style Transfer Using Convolutional Neural Networks, in 2016. This process was made popular by showing an image inferred in a range of different painting styles. In this case, it is easy to understand that the content remains the same but the style of its visualization changes. Laney initially explored this as a way to keep the same expression of the witness but change the look of their face. In their tests, they tried rendering the new face in an abstracted cartoon style. The problem was that a caricature of a person actually does not mask their identity well. You still know who someone is from a cartoon version of them. It also could be argued that this version may not have been in keeping with the serious nature of the documentary.

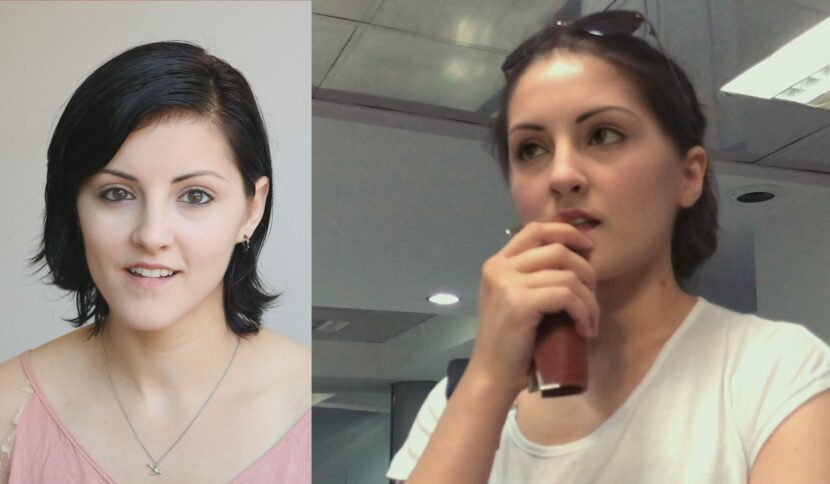

The solution was to swap the witness’s face with that of an actual actor. The principle of such a transfer is a correlation between two faces. Data sets of both faces are required, ie. both the witness and the actor, and whose face the audience will only ever see.

This application is different from many ‘deepfake’ projects or neural renders where the face of a famous person is applied to an actor. Specifically, in the typical ‘deepfakes’ approach, the face that is being replaced is filmed under control conditions and the ‘famous’ face is inferred from a compile of images and clips scrapped from the internet. In Welcome to Chechnya the reverse is true. The witnesses and their base footage was shot in documentary style without VFX control or special controlled lighting etc, and the sampled face of the volunteers or actors were able to be shot in controlled conditions.

We spoke to Ryan Laney to compile a set of tips and techniques for doing this style of neural rendering and AI. The typical process for using Neural Rendering involves

- Preparing and cleaning the data,

- Evaluating and tuning the model &

- Putting the model into production.

Shooting the right training data, the right way:

It is always better if you can shot your own training data

Laney and the team shot the actors under controlled conditions. First, they tested shooting the actors on a white screen but then they moved to film the actors on blue screen. This was because they found by pre-comping the training footage of the actor over an approximately matching background helped the process.

The rule of thumb was that as soon as a person’s head turns away from looking directly to the camera, and their far side ear is no longer visible, it was worth compositing the training data actor over a relevant background. “As they turn and then you have skin directly over the background, that is where all this extra work comes into play”, explains Laney. “Especially when the head turns even more and their eye or eyelash comes over the background,.. and this is where you will see missing in most examples of Neural Networks.” The problem stems from a first-order gradient issue, as even with the crop, half the frame is background either lighter or darker than their skin color. “If I have shot my face double over say white, and I am working with a shot over a dark background, for example, if they are heavily front-lit, then the gradient would be backwards and your face actually flips (horizontal).” To find the correct background the team took effectively an average of the real background.

The right training footage matters a lot.

As a general rule, Laney shot a lot of material to be used as training material but ‘more does not equal better’ when it comes to neural rendering. Laney found that a key step was focusing on selecting the right training clips. From the large library of possible training material for any witness face replacement, only a carefully curated sub-set was used. The process deployed several machine learning tools to keep track of the training data and automatically produce a set of frames/clips based on matching head angle, color temperature, and lighting. Naturally, there was person-specific training, but also often sequence-specific and even shot-specific training data. pulled from the master database using a NumPy (Euclidean) distance, ‘big table’ lookup approach based on the data set’s face encoding. The face encoding was based on face angle and expression.

A range of motion (ROM) and pangrams were better than trying to get the actors to match emotion.

The training data system had levels of categorized data. For each witness, there were actor-specific groupings of training data, and then there were often scene-specific categories and even shot-specific groupings. In other words, the frames used to train on could vary on a shot-by-shot basis, depending on the raw lighting and camera position of the documentary footage.

When filming the actors the team encouraged the actor to move their heads, perhaps in a circular motion, not so fast there is motion blur but certainly, the team did not want the actors to remain still as they were filmed. One important aspect Laney found was to ask the actors to also tilt their heads side to side. “We get a whole range of great lighting variation as someone twists their head, and that lighting variation is really important, and it just doesn’t matter that their head is not vertical in frame.”

It was not key to emote appropriately in the training session. In capturing the training data, Laney did not find it useful to try and get the actors to specifically act out any particular performance, or record them acting any specific emotions. Rather a range of motion, expressions, and spoke dialogue was ideal, and specialist ML programs solved the rest, by selecting the most likely useful subset from all the possible footage and image options. This used a separate pre-face swapping ML program that just focused on training data preparation.

One needs to be careful to not bias the training data

Each actor was filmed with 9 cameras placed around them to capture multiple angles of the same performance. This was done with some thought that photogrammetry might be needed. The project never did use the camera’s multiple clips for that purpose. During the setup of the cameras, the actual shutter control could not be controlled synchronously. As a result Ryan just let the cameras run and they did not cut between takes. This resulting in training footage of both the deliberate takes and the random in-between recording of the actors talking to the director, who was off to one side. The result was that the training data as a whole had a bias to one side of the actor’s face, (the side shown to the cameras when the actor turned to talk to the director). Ryan points out this is exactly the sort of unbalanced or training data bias that one needs to avoid.

In Welcome to Chechnya, they avoided this by using their ML pre-training data sorting. The team could have manually edited all the footage, but there was a huge amount. Instead of editing and then labeling all the training data, Laney wrote a subsystem to facial encode the documentary witness footage and then find similar takes in the training data – thus the training data sets were built up from the ML pre-pass take selection process. This led to tight coupling and allowed strong attention to the training data that would be used.

Matching lighting

Before the training even starts, the detailed pre-processing works to match head position, expression, and lighting between the actor and the witness. Not only was it important to have training footage with a good range of lighting directions, side, top, etc, but the process also worked best when the color temperature of the footage was able to be matched. It is important to note, one does not need to have perfect matches, but the better the training data matches the more likely the end results will succeed.

Auto-white balancing (auto-exposure) was used on many of the documentary cameras. To work with this range of footage, the pipeline solution was to find the principal colors in the shot using K-means clustering and then normalize the faces before running the veiling process. This helped get the best skin tone match for the two people on either side of the effect (inference).

k-means clustering is a method of vector quantization, originally from signal processing, that aims to partition n observations into k clusters in which each observation belongs to the cluster with the nearest mean (~average), serving as the basic core ‘example’ or prototype of the cluster.

Body types – the fullness of cheeks were more important than chins

Ryan found that chins were easy to morph, so in casting the actors he found having the right fullness around the cheek area was more important than the jawline. The actors did not need to look the same but it helped enormously to have the temples and cheeks similar. “The thing about jaws is that they are easy to warp, .. it is easier to move a jaw than take 30 pounds off their face.”

While not a body type issue, the lenses that shoot the faces affect the perception of the faces relative proportions. In the extreme case, a wide-angle lens will make a nose seem much larger relative to the eyes than a 200mm lens would filming the same face.

A key blend place is the hairline and in particular the temples. People have very different hairlines, and getting the hair transition line on the side of the head, especially true for short-haired witnesses, proved a key aspect of selling a blended face.

Documentary raw footage pre-processing

The witness footage was also pre-processed.

As the witness footage was shot ‘in the wild’ it has a lot of naturally occurring issues and visual issues that would cause ML problems. Some backgrounds could ruin face tracking and inference. In particular, if the background was varying high contrast, it could be a problem, such as when one of the witnesses was filmed walking past a black and white fence. The majority of the face worked well, but the nose and edge line of the face was unstable. To solve this, the varying background was averaged and the witness footage pre-comped to produce a more even tone or lower frequency background. This was then reversed once the face was solved, back to the original background.

Tracking and partitioning

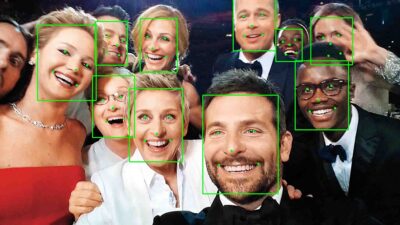

All the footage was tracked and for shots with multiple witnesses in a shot, where all their faces had to be swapped, the pipeline relied on ML tracking each actor. AlexNet was used to track multiple faces in the same shot. AlexNet is the name of a fast GPU convolutional neural network (CNN), designed by Alex Krizhevsky for object recognition/person identification. AlexNet is an incredibly powerful CNN capable of achieving high accuracies on very challenging datasets. However, it does not work well on side profile shots. For those shots a simple optical flow was used to predict where a person would be in the next frame, “it was one of those solutions that I just hacked together, to be honest,” Laney jokes.

Stabilizing and treating

All faces were tracked and centered as a part of the process. The tracker is an MTCNN tracker Multi-Task Cascaded Convolutional Neural Network which uses a neural network to detect faces and facial landmarks in images. As with most Teus Media’s tech, the approach was only published in the last few years, (See Zhang et al. 2016). The witness footage could also be segment mapped. “We use optical flow to make sure our tracks are tight, and optical flow picks up background movement. So any time the foreground and background move in opposite directions it can make life difficult for optical flow. We got around this by the use of segmentation maps to remove the background to help get a clean optical track. We could also a rough approximation of the segment map to say ‘only give me the flow of the pixels inside the masked area’,” explains Laney.

Footage complications

Higher resolution

Faces leaving frame, being cut off, or shot in extreme closeups posed interesting resolution problems.

In a few shots, the camera filmed the witnesses in extreme closeup. In these special cases of extreme close-ups, it meant doing the face inference at a higher resolution using custom SuperResolution ML techniques. As Welcome to Chechnya was a UHD master, if the face filled frame and the brow was cut off, “we were looking at 3 or 4K from chin to brow,” Laney explains. To solve this, he down-resed some frames of that specific person, thus creating both higher and lower resolution training data of the same image. Laney then trained on that specific data to produce a model uniquely trained to that one person in that specific lighting setup. This makes it more effective than an off the shelf up resing tool that needs to work on any face or any image. More specifically, once the right matching clips of the actor are selected as before, the actor’s face, which was filmed at 4K, is down resed to 1K. The master censor veil/face swap always happened at 1K resolution. After the down-res process, there are 2 accurate sets of training data that will allow a custom shot-specific AI model to train on going back: 1K to 4K. That AI model now knows how to do great upresing of one unique face and thus it can be used when the same actor’s face has face swapped onto the witness. The computer knows how to go from 1K to 4K on this specific face, which is, of course, now in the new composite. The approach uses a ‘VGG-16 like’ convolution network.

Cigarette Smoke and other odd occlusions

For some shots, the witness was smoking. Unsure what the computer would make of this, the team processed the clips and got out a smoke-like movement across the faces of the witnesses, but incorrectly inferred in human skin colors. As interesting as this ‘pink smoke’ might be, it was not useable. Instead, the decision was made to replace the face using surrounding frames, and provide the computer with smoke-free face frames using techniques such as optical flow, and then digital smoke was added back on top at the end of the censor veil process. Most of the optical flow was a python-driven optical flow using OpenCV to find the vectors, and there was also some Foundry Nuke used paint vectors used as well as video in-fill/content aware-fill.

Material that is noisy or grainy, also compression artifacts

A range of cameras was used when filming the raw documentary footage. The approach was to train on the grain or noise, remove it, process the clip, and then use ML to reintroduce correct ‘trained’ noise that perfectly matched each camera type.

Lack of training material

As the witnesses were not shot for VFX, Laney had to rely on having enough material from the documentary crew to train with, and for most witnesses, this worked well. But for some witnesses that were at the edge of the storytelling, and thus who did not feature, Laney had very little witness training material.

People in the film who did not have many shots required a special grouping with a special training set that included different people’s faces. “I pulled the other faces from the most similar witnesses or actors and then trained the inflated set on top of the closest good model of a similar witness-actor model,” he explains. “We basically filled out the data for the more general model. The model is propagated down to the shot level in the same way and then overfit to the shot-frames. In hindsight, this was an overbuild. Because of the shot-frames to actor-data lookup, the training for a shot is only ever the angles that already match and transfer learning off already-trained similar-pairs, worked just fine.”

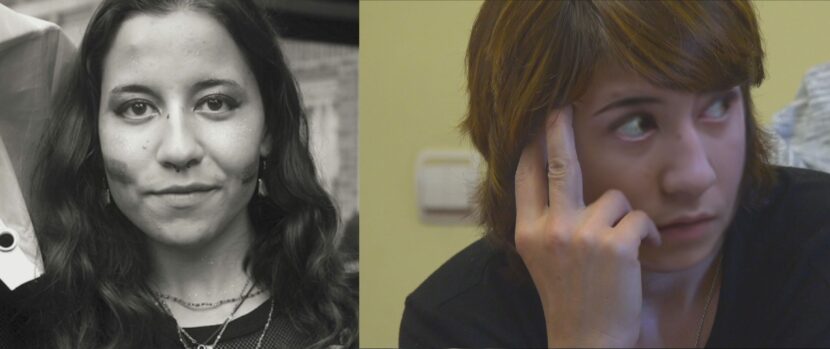

Working with Children

There was a minor in the film, so Laney and the team decided to try and de-age their actor to use as the source for the face swap onto the child. The first attempt used Flickr Faces and Style-Gan for a test. The Flickr Faces dataset consists of 70,000 high-quality PNG images at 1024×1024 resolution and contains considerable variation in terms of age, ethnicity, and image backgrounds. (The images were crawled from Flickr, with permission). However, no public databases were used for the actual film (or any of Teus media projects) since the approach failed.

In those early tests Laney found that “before the age of 10, most images are face on (looking at the camera), which reduces their broad usefulness. StyleGAN also works for stills, but Laney is yet to find a way to get a temporally consistent face using this particular approach.

StyleGAN is a method of using a generative adversarial network (GAN) introduced by Nvidia researchers in 2018/ 2019. StyleGAN and StyleGAN 2 can produce a face that is remarkably realistic based on a statistical AI inference built on a wide training data set. The output face looks incredibly real but is unique and yet fully synthetic. But given it would not remain stable, “the latent space is very sensitive, and so we haven’t found a way yet to get a consistent look out of it as the face moves,” he explains. Laney found himself having to start with a non-AI approach for the de-aging. “I took the facial landmarks and created a function to them as if they were in 3D space and then that drives a mesh warp that moves the face,” he explains. The hero actress was actually well into her thirties. Once this actress is de-aged, this younger version is used in exactly the same way as all the other actors and witnesses. It is important to note, this younger version of the hero actress, while looking consistent at different angles, does not need to look like anyone in particular. Laney is not de-aging the actress to a version that the actress may have looked like before in her life. For the censor veil process to work, the witness just needs to look like someone other than themselves, but not anyone in particular. The principal aim is to maintain the emotional authenticity of the witness while hiding her real identity.

By virtue of some casting issues, Laney actually did the process twice, with two different hero actresses veiling the same child witness. What was fascinating to all was to compare the two results. While they looked different, they seemed to have exactly the same expression. Laney commented that “we felt like this was inadvertently a perfect test as it showed we have detangled the expressions of a specific face from the underlying emotions, and that is a very exciting thing.”

Even with the best-laid plans…

It is often said this style of AI is a ‘black-box’, in the sense that we don’t really exactly understand how the various ML tools are specifically solving an individual example. For a few shots, even with all the careful pre-filtering and sorting, Laney just had an unexpectedly hard time getting to work well. Here are a couple of “why the heck was that so hard” kind of shots, “On this first shot, the network had an incredibly tough time resolving the screen-left eye. As a result, the shot never looked quite right to me,” he explains.

Lessons learned and the future

Ethics

To maintain a clear ethical journalistic presentation, the base face is blurred prior to the final composite, thus flagging subtly where a face has been treated.

The Future

Laney has a vision for the future, “I like the idea of not just building one (ML) model to rule them all, I want people to understand that the Neural Network is like a compositing tool and Tensorflow is like Nuke. So if I want to do this thing, then I can use this general-purpose tool, but I can use it in different ways. To continue with the analogy of Nuke, most compositors, only use 5 or 10 nodes regularly but they use them in different ways. And they can combine them in special ways and create their own nodes. But I feel there has to soon be a Nuke-like tool for building Networks to do special-purpose (ML) things.” Laney points to how Houdini built a node-based graph tool, for simulations. Sidefx does not build the simulation, they build the network that the information will flow through.

Teus Media makes face replacements and their censor veil available on a budget hitherto unheard of. Laney specifically wants to help documentary filmmakers and has already helped a huge range of people tackling many social and human rights projects.

Ryan Laney is also talking at DigiPro and Pipeline conference later this month.

Note in this article, only one witness, Maxim Lapunov, is shown untreated, as he went public in the film. No other witness’s identity is disclosed here.